Abstract

To use FastCAV with Captum, install the package. Then follow the example below to use FastCAV as a drop-in replacement for the default TCAV classifier:

```python
import torchvision
from captum.concept import TCAV
from captum.attr import LayerIntegratedGradients
from fastcav import FastCAVCaptumClassifier

# Load your model
model = torchvision.models.googlenet(pretrained=True)
model = model.eval()

# Replace the default classifier with FastCAV
clf = FastCAVCaptumClassifier()
layers = ['inception4c', 'inception4d', 'inception4e']

# Use with TCAV as usual
tcav = TCAV(
    model=model,
    layers=layers,
    classifier=clf,  # Use FastCAV instead of default classifier
    layer_attr_method=LayerIntegratedGradients(
        model, None, multiply_by_inputs=False
    )
)

# Continue with your TCAV workflow...
```

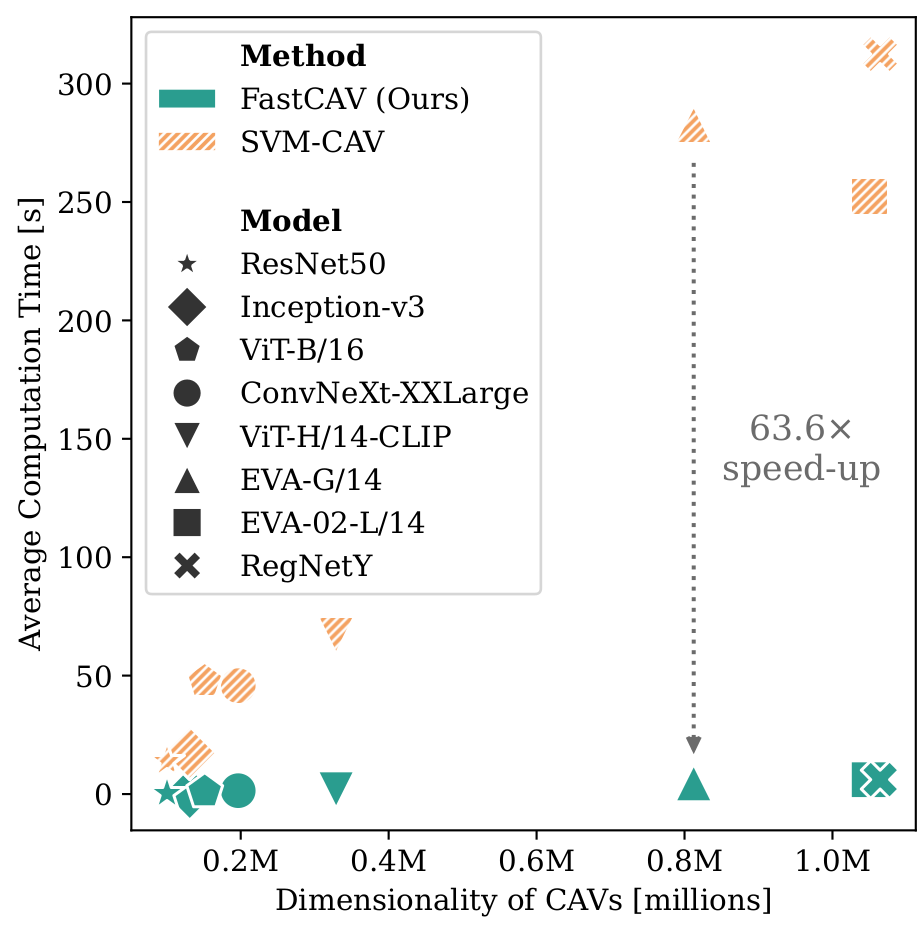

To empirically compare FastCAV with SVM-based computation, we evaluate a broad spectrum of model architectures trained on ImageNet. We split our investigation into four dimensions: computational time, accuracy, inter-method similarity, and intra-method robustness.
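Two of these dimensions, inter-method similarity and intra-method robustness, come down to comparing CAV directions with each other. The following is a minimal sketch of such a comparison, assuming cosine similarity as the measure; the exact metrics used in the paper may differ. The helper names and the toy vectors are hypothetical and only serve as illustration.

```python
import math
from itertools import combinations


def cosine_similarity(u, v):
    """Cosine of the angle between two equally sized vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


def mean_pairwise_similarity(cavs):
    """Average cosine similarity over all unordered pairs of CAVs."""
    pairs = list(combinations(cavs, 2))
    if not pairs:
        raise ValueError("need at least two CAVs")
    return sum(cosine_similarity(u, v) for u, v in pairs) / len(pairs)


# Toy CAVs (made-up numbers) for one concept at one layer.
fastcav_runs = [[1.0, 0.0, 0.1], [0.9, 0.1, 0.0]]
svm_runs = [[0.8, 0.3, 0.0], [0.7, -0.2, 0.2]]

# Intra-method robustness: agreement between repeated runs of one method.
intra_fastcav = mean_pairwise_similarity(fastcav_runs)
intra_svm = mean_pairwise_similarity(svm_runs)

# Inter-method similarity: agreement between one CAV from each method.
inter = cosine_similarity(fastcav_runs[0], svm_runs[0])
```

Under this reading, a value close to 1 means the two vectors point in nearly the same direction in activation space.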

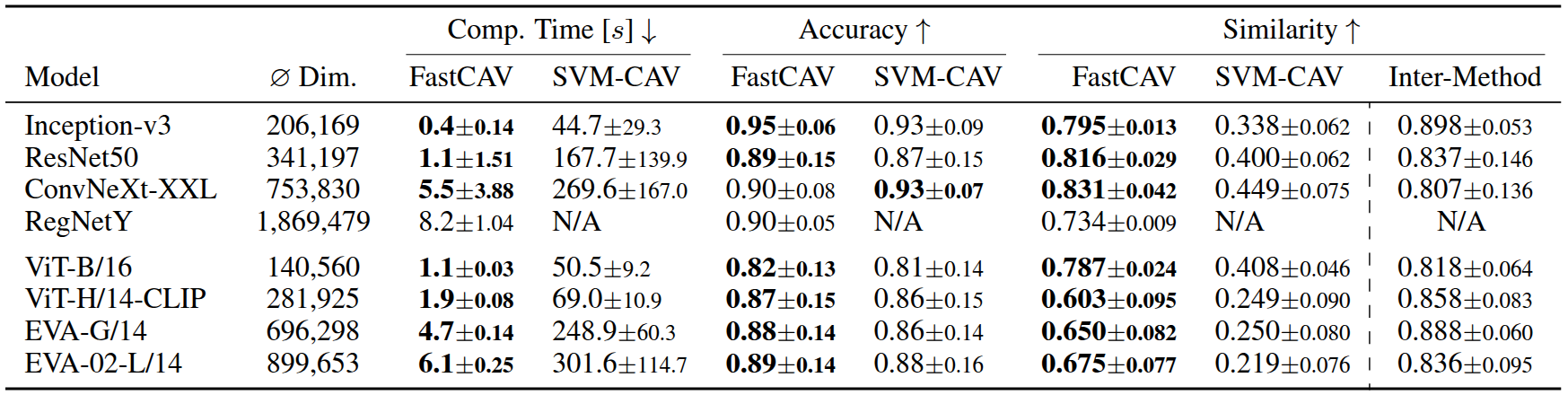

Comparing our approach FastCAV with SVM-based computation. Bold values indicate better results. "N/A" indicates that no results were produced due to the overall computational time exceeding four days. More details can be found in our paper!

FastCAV can act as a more efficient drop-in replacement for downstream applications of CAVs. Examples include Testing with Concept Activation Vectors (TCAV) or Automatic Concept-based Explanations (ACE).

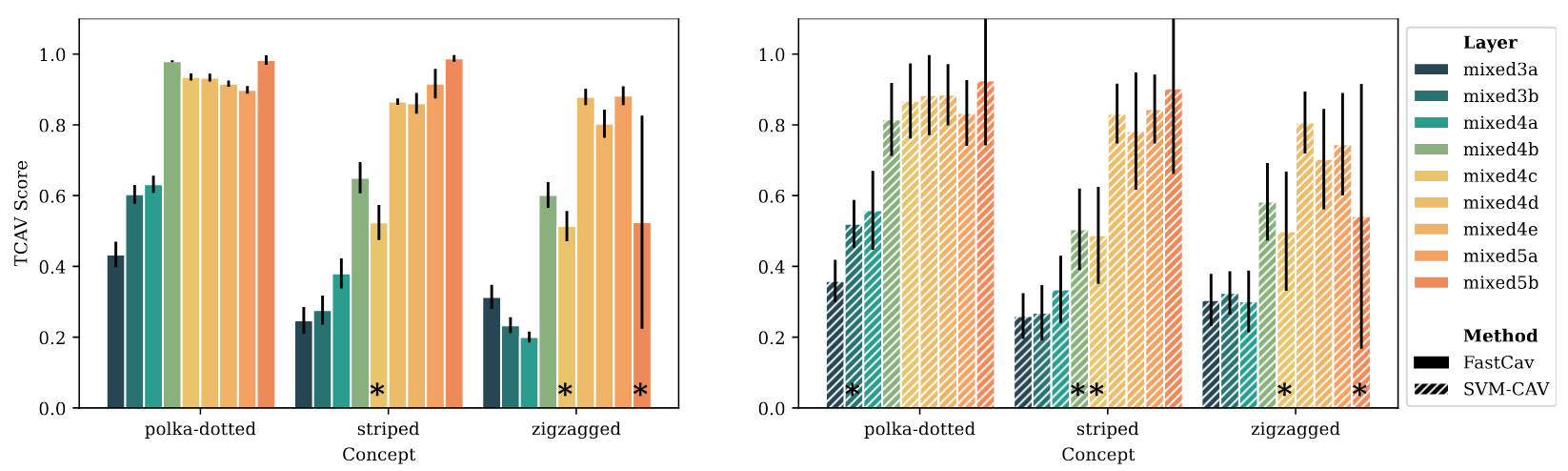

TCAV scores for various GoogleNet layers. We compare the concepts "polka-dotted", "striped", and "zigzagged" for the class ladybug using FastCAV against the established SVM approach. We mark CAVs that are not statistically significant with "*". The insights into the GoogleNet model are consistent between both our approach and the SVM-based method. Nevertheless, we observe lower standard deviations and faster computation speed for FastCAV.
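For readers unfamiliar with the scores in this figure, the sketch below illustrates the sign-count form of the TCAV score from Kim et al. (2018): the fraction of class inputs whose class logit increases when the layer activations move along the CAV. It assumes the gradients of the logit with respect to the layer activations are already available as plain lists; the function name and toy numbers are hypothetical, and Captum's own implementation is not reproduced here.

```python
def tcav_score(gradients, cav):
    """Fraction of inputs with a positive directional derivative along the CAV."""
    if not gradients:
        raise ValueError("need at least one gradient vector")
    positive = 0
    for grad in gradients:
        # Directional derivative of the class logit along the concept direction.
        directional_derivative = sum(g * c for g, c in zip(grad, cav))
        if directional_derivative > 0:
            positive += 1
    return positive / len(gradients)


# Toy example: four inputs of one class, two-dimensional activations.
cav = [1.0, -1.0]
gradients = [[0.5, 0.1], [0.2, 0.4], [0.3, -0.2], [-0.1, -0.6]]
score = tcav_score(gradients, cav)  # 3 of 4 directional derivatives are positive
```

Repeating this computation with CAVs trained against different random sets yields the spread of scores from which standard deviations and significance tests can be derived.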

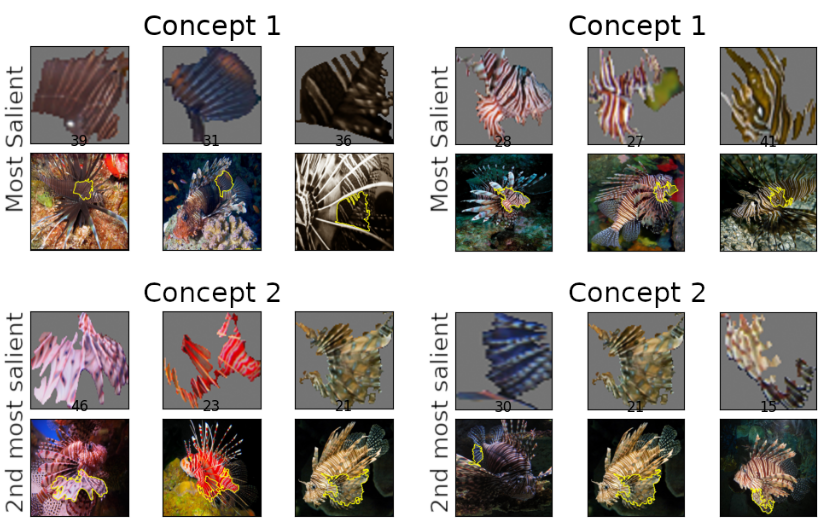

Comparison of the most salient concepts discovered by ACE using either our FastCAV or the established SVM-CAV. Here, we use the class lionfish and display the two most salient concepts. We find that the patches discovered by both approaches are similar and congruent with the original observations of Ghorbani et al. (2019).

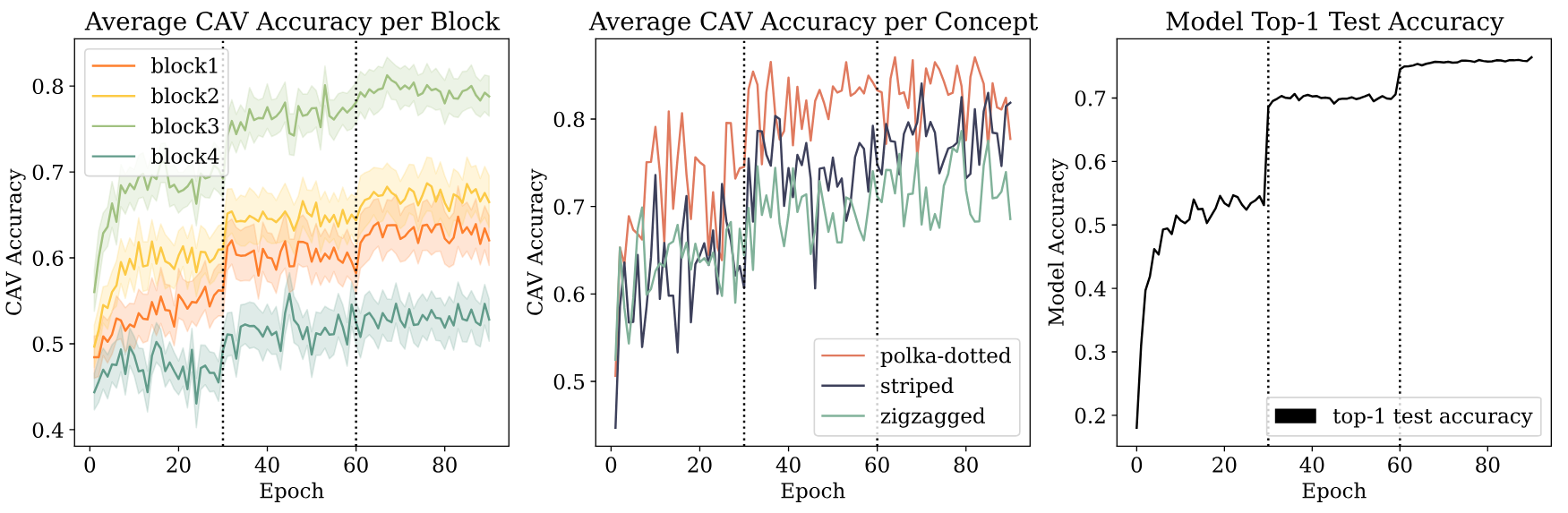

FastCAV enables previously infeasible analyses for models with high-dimensional activation spaces. To show this, we train a ResNet50 on ImageNet from scratch and track the evolution of learned concepts during training.

We find that the learning of concepts aligns with a simultaneous increase in predictive performance, suggesting that the model is learning to recognize and utilize relevant information for its predictions. We observe similar trends for specific concept examples, although these results vary more across training steps than the average across concepts. Notably, we observe stark increases in average CAV accuracy after each epoch in which the learning rate is reduced.
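As a rough illustration of the quantity tracked here, the sketch below assumes that CAV accuracy means the accuracy of the linear rule induced by a CAV when separating concept activations from random activations. That definition, the function name, the checkpoint labels, and all numbers are assumptions made for this example and are not taken from the paper.

```python
def cav_accuracy(concept_acts, random_acts, cav, bias=0.0):
    """Share of activations classified correctly by the rule cav . a + bias > 0."""
    total = len(concept_acts) + len(random_acts)
    if total == 0:
        raise ValueError("need at least one activation vector")

    def is_concept(activation):
        return sum(x * w for x, w in zip(activation, cav)) + bias > 0

    correct = sum(1 for a in concept_acts if is_concept(a))
    correct += sum(1 for a in random_acts if not is_concept(a))
    return correct / total


# Hypothetical held-out activations at two training checkpoints.
checkpoints = {
    "early": {
        "concept": [[0.1, 0.0], [-0.2, 0.1]],
        "random": [[0.0, 0.1], [0.3, -0.1]],
        "cav": [1.0, 0.0],
    },
    "late": {
        "concept": [[1.0, 0.2], [0.8, -0.1]],
        "random": [[-0.5, 0.3], [-0.2, 0.0]],
        "cav": [1.0, 0.0],
    },
}

# One accuracy value per checkpoint gives a learning curve for the concept.
accuracy_curve = {
    name: cav_accuracy(data["concept"], data["random"], data["cav"])
    for name, data in checkpoints.items()
}
```

Averaging such curves over many concepts would give an average CAV accuracy per training step, which is the kind of trend described above.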

Furthermore, we observe that early and middle layers are more likely to learn textures than later layers, supporting previous findings (Kim et al., 2018; Ghorbani et al., 2019; Bau et al., 2017). Our observations demonstrate that FastCAV can be used to study the learning dynamics of deep neural networks in a more fine-grained manner and for abstract concepts.

If you find our work useful, please consider citing our paper!

```bibtex
@inproceedings{schmalwasser2025fastcav,
  title={FastCAV: Efficient Computation of Concept Activation Vectors for Explaining Deep Neural Networks},
  author={Laines Schmalwasser and Niklas Penzel and Joachim Denzler and Julia Niebling},
  booktitle={Proceedings of the 42nd International Conference on Machine Learning (ICML)},
  year={2025},
  url={}
}
```